Deep Deterministic Policy Gradient

Jump straight to the code -> https://github.com/samlanka/DDPG-PyTorch

Deep reinforcement learning has observed a relative spike in recent years. It combines the magic(or alchemy, as you choose) of deep neural networks with concrete control optimizations of dynamical systems, enabling agents to learn by trial and error. RL differs from supervised learning because the supervision is not determined by the final output alone; it is rather controlled by a reward signal which serves as a score for the sequence of actions leading to the final output. Hence, we are indirectly supervising the actions the agent takes in its attempts to address the task at hand. Reward signal specification is a fascinating open area of research. For the purpose of this article however, we will leave the intricacies of reward signal theory for another day. We will assume that the agent receives a small positive reward for completing the task and zero otherwise.

The land of deep reinforcement learning is generally split into two camps: 1) Value-function approximators 2) Policy gradient methods

Value-function approximators assign every state in the environment a “value”, a measure of how much reward the agent can collect from that state onwards. In other words, how close is the agent to finishing the task? E.g. In a game of tic-tac-toe if we consider the agent playing "crosses", State 1 in the figure below is an undesirable low value state while State 2 depicts a high value state since the agent has won the game. Fun fact: Tic-tac-toe has a total of 765 valid states.

One step deeper, we find Q-value function approximators. Instead of evaluating each state, these models assign a value to each state-action pair, thereby providing us with extra information that given a state, what are the expected returns from each of the possible actions we can take in that state. As we see in the following picture, given the state S, the ideal action for a "crosses" agent would be to place a cross in tile 9 and seal the win. Hence the Q-value Q(S, 9) has a high value while all other state-action pairs of S have low Q-values because a possible nought in tile 9 in the next step will result in a loss.

As you might have guessed, the ideal action to take in any state is the one associated with the highest Q-value. The more accurate the Q-value estimates for each state-action pair are, the better the agent can exploit that knowledge and take the best action. Through repeated interactions with the environment, the agent records the returns it observes as a function of its visited states and actions and updates its Q-values. This is known as Q-learning. In the case of continuous state spaces, we use function approximators to generate Q-values and generalize to unseen states. If the function approximator happens to be a neural network, we call the model Deep Q-Network and use it to achieve superhuman performance in Atari.

In terms of probability, we would like the action with the greatest Q-value be assigned the largest probability of being chosen given a state.

Value function methods have two steps; policy evaluation(prediction) followed by policy improvement(control). Policy gradient methods cut out the need for policy evaluation like Q-learning by using function approximators to directly model the probability distribution over actions given a state. Hence policy gradient methods directly output the policy required for the task with the caveat that policy gradients require far greater number of iterations and are generally unstable. They do however, have a significant advantage when the ideal policy is a stochastic policy or as in our case of interest, when we are dealing with continuous action spaces. The argmax over a continuous action space from the above equation can simply be computed through a policy network.

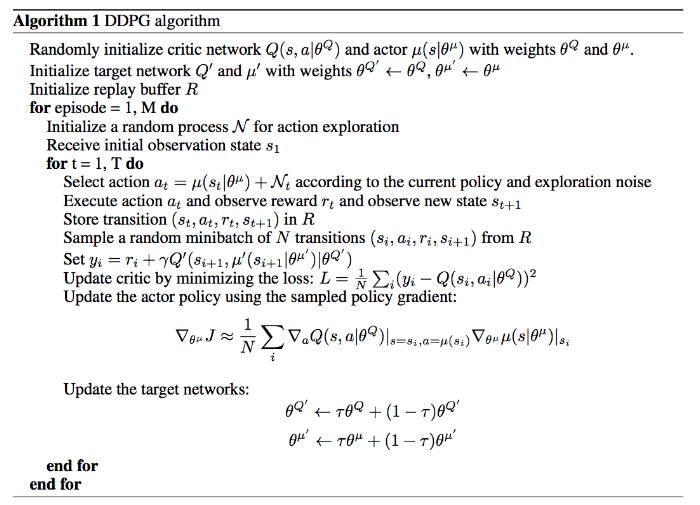

This is the core idea behind the Deep Deterministic Policy Gradient algorithm from Google DeepMind. DDPG scales the DQN algorithm to continuous action spaces by using a policy neural network. Two networks participate in the Q-learning process of DDPG. A policy neural network called actor provides the argmax of the Q-values in each state. A value neural network called critic evaluates the Q-values of actions chosen by actor. The interplay between the actor and critic is that the actor tries to maximize the output of the critic and the critic tries to equate the value of the actor output to the returns observed in real life.

Source: Lillicrap, Timothy P., et al. "Continuous control with deep reinforcement learning."

An obvious utility of DDPG is to solve tasks involving continuous control where both the state space and action space are continuous, like robotics. DeepMind Control Suite is a library which provides simulated continuous control environments to benchmark and evaluate RL algorithms.

My open-source implementation of DDPG for DM Control Suite environments using PyTorch is available here: https://github.com/samlanka/DDPG-PyTorch

(Prerequisites: Python 3, CUDA 9, Mujoco, PyTorch)

By changing the 'ENVNAME' and 'TASKNAME' variables in main.ipynb, we can play around with different test environments and their associated tasks. Given below a complete list of all the available environments and their tasks.

Suppose we wish to employ DDPG to teach a 2 DoF arm to reach a target spot. The environment for this task is 'reacher'; let's observe how the agent learns to perform the 'hard' version of this task. The actions are the relative angular displacements and velocities of the arm joints. The state space is comprised of many variables that characterize the position and velocities of all the objects in the scene. For every time step the end effector of the arm touches the red target, the agent receives a small positive reward.

At episode 20, the agent chooses random actions, exploring the state space and figuring out how the states in which it observes a reward are correlated with each other.

By episode 500, the agent has an idea of the task it has been assigned as it hovers around the target spot, on occasion gathering reward. It is yet to realize that it has to reach AND STAY at the target.

After 1000 episodes, the agent knows exactly what is expected of it.

DDPG cannot as of yet translate to actual robots or successfully complete tasks with large state spaces which holds abundant promise for exciting future research.

Bonus reading:

An Outsider's Tour of Reinforcement Learning